Google I/O Unveils AI Advances, But Hardware Takes a Backseat

Google’s AI Ambitions Take Center Stage at I/O

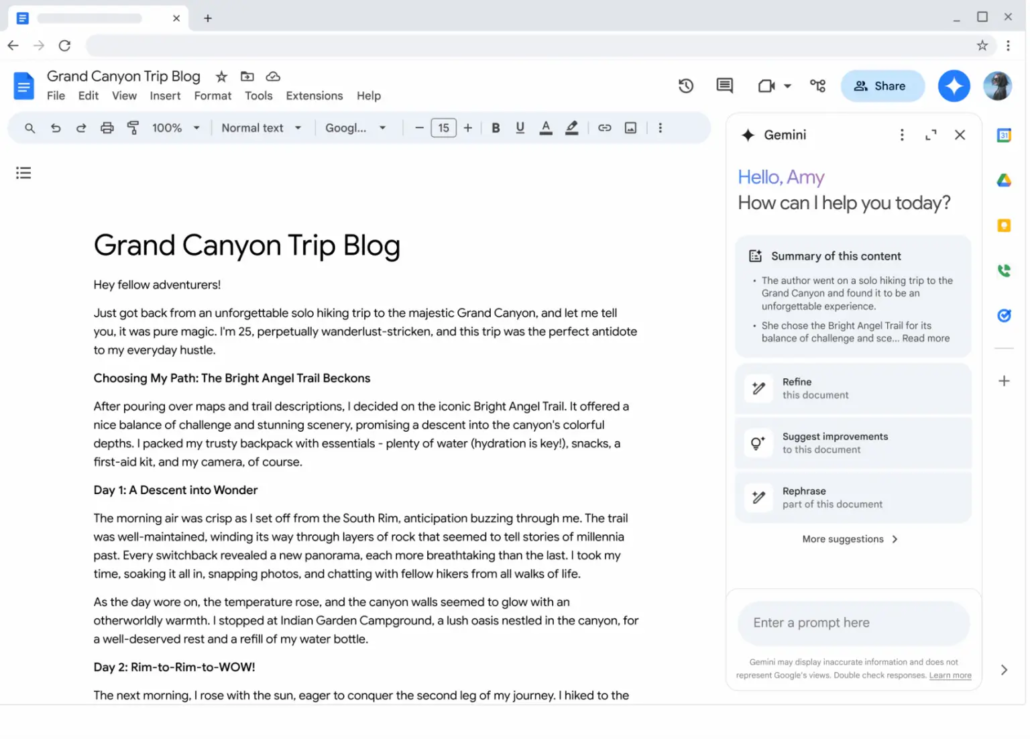

At the recent Google I/O developer conference, Google set out to show developers and curious consumers alike why it is leading the pack in AI technology. The event featured an array of announcements: a revamped AI-driven search engine, an AI model capable of processing up to 2 million tokens at once, and AI enhancements across Google Workspace apps such as Gmail, Drive, and Docs. Google also introduced tools for embedding its AI into developers’ apps and offered a glimpse of the future with Project Astra, an ambitious initiative that aims to respond to sight, sound, voice, and text together.

Each innovation, promising in its own right, contributed to a deluge of AI news that, while targeted at developers, also sought to impress everyday tech users with the potential of these advancements. However, the sheer volume of information might leave even tech-savvy spectators puzzled. What exactly is Astra? How does it relate to Gemini Live? Is Gemini Live similar to Google Lens, and how does it differ from Gemini Flash? Are AI-powered glasses becoming a reality, or are they still a concept? And what are all these other names: Gemma, LearnLM, Gems? When will these innovations reach your daily digital tools like email and documents, and how can you use them?

For those in the know, these announcements are thrilling; for others, they might need a little more explanation. (If you’re looking for clarity, follow the links to learn more about each development.)

Despite the palpable enthusiasm from presenters and the audible cheers from Google employees at the conference, what seemed to be missing from Google I/O was a clear depiction of the impending AI revolution. If AI is destined to reshape technology as profoundly as the iPhone revolutionized personal computing, this event did not showcase that groundbreaking product.

The overall impression was that we are still in the nascent stages of AI development.

Offstage, there was an acknowledgment among Google employees that their AI projects are still works in progress. For example, during a demonstration of how AI could instantly generate a study guide and quiz from a document several hundred pages long—an undoubtedly impressive capability—it was noted that the quiz answers lacked citations. When pressed about the AI’s accuracy, a Google staff member conceded that while the AI usually gets it right, future iterations would include source citations for fact-checking. This admission raises questions about the current reliability of AI-generated study materials for academic preparation.

In another demo, Project Astra used a camera mounted over a table and linked to a large touchscreen, which allowed interactions like playing Pictionary with the AI, identifying objects, asking questions about them, and having the AI weave stories. However impressive these capabilities were on their own, their practical applications in everyday life remained unclear.

For instance, during the livestreamed keynote, when asked to use alliteration to describe an object, Astra saw a set of crayons and promptly coined “creative crayons colored cheerfully”—a neat party trick indeed.

At #GoogleIO, we got a private test of Project Astra (not sure I was allowed to film this?). @skirano talking to Astra while it identified what it was looking at. Full multimodal, no lag, super smooth. Insane. pic.twitter.com/ayWPZRiEYq

— Conor Grennan (@conorgrennan) May 15, 2024

In a more private setting, I challenged Astra to identify objects from my rudimentary drawings on a touchscreen. It instantly recognized a flower and a house I sketched. However, when I attempted to draw a bug—depicting it with a large circle for the body, a smaller circle for the head, and little legs protruding from the body—the AI hesitated. “Is it a flower?” No. “Is it the sun?” Again, no. The Google employee suggested I guide the AI towards guessing a living creature. After adding two more legs for a total of eight, the AI finally guessed correctly: “Is it a spider?” Yes. In contrast, a human might have recognized the bug right away, despite my limited drawing skills.

In a sign of how early the technology still is, Google staff restricted any recording or photography during the Astra demo. Moreover, although Astra was running on an Android smartphone, attendees were not allowed to interact with the app directly or even hold the device. While the demonstrations were entertaining and the underlying technology impressive, Google missed a critical opportunity to demonstrate how this AI could meaningfully impact everyday life.

One might question the practical applications of such demonstrations: When will you ever need an AI to concoct a band name from a picture of your dog and a stuffed tiger? Or, is it really necessary for an AI to assist in locating your glasses? These scenarios, also showcased during the keynote, highlight the whimsical but perhaps limited utility of the current applications of Google’s AI advancements.

This isn’t the first time we’ve seen a technology event showcase a vision of an advanced future without tangible real-world applications, or pitch minor conveniences as major upgrades. Google itself has a history of such demonstrations, having notably featured its AR glasses at past events. Remember when Google parachuted skydivers wearing Google Glass into an I/O event? That project, over a decade old, has since been discontinued.

After observing this year’s I/O, it seems Google views AI primarily as a new avenue for generating additional revenue—consider the push for Google One AI Premium to access product upgrades. This approach suggests that Google might not be the one to deliver the first major consumer AI breakthrough. Reflecting on this, OpenAI’s CEO Sam Altman recently speculated that while the original intent for OpenAI was to develop technology that could bring widespread benefits, it might instead be that others will use AI to create remarkable innovations from which we all benefit. Google appears to be in a similar position.

Nonetheless, there were moments when Google’s Astra AI showed more promise. If it can accurately identify code or offer suggestions for system improvements based on a diagram, for instance, its utility as a sophisticated work tool becomes more apparent: an evolved version of Clippy, if you will.

There were indeed instances where the practicality of AI in the real world was evident at the conference. For example, an improved search tool for Google Photos and the integration of Gemini’s AI in your inbox, which can summarize emails, draft responses, and list action items, might help you achieve—or at least come closer to—the elusive inbox zero. However, can it also intelligently clear out unwanted but non-spam emails, organize emails into smart labels, ensure you never miss a critical message, and provide an actionable overview of your inbox upon login? What about summarizing key insights from your email newsletters? Not quite yet.

Furthermore, some of the more sophisticated features, such as AI-powered workflows and the demonstrated receipt organization, won’t be available in Labs until September.

When considering the impact of AI on the Android ecosystem—a key topic for developers at the event—there was a sense that even Google is not yet ready to argue convincingly that AI can lure users away from Apple’s ecosystem. When asked about the best time to switch from iPhone to Android, the general consensus among Google employees of various ranks was “this fall.” This suggests that significant updates are expected around Google’s fall hardware event, likely timed with Apple’s adoption of RCS, which could make Android’s messaging capabilities more competitive with iMessage.

In summary, while the adoption of AI in personal computing devices might eventually necessitate new hardware (perhaps AR glasses, a more intelligent smartwatch, or Gemini-powered Pixel Buds), Google is not yet prepared to unveil these products or even hint at them. And as the lackluster launches of Humane’s Ai Pin and the Rabbit R1 have shown, mastering hardware remains a formidable challenge.

While Google’s AI technology on Android devices shows considerable promise, accessories such as the Pixel Watch and the Wear OS platform that powers it received scant attention at I/O, with only minor performance enhancements mentioned. Even the Pixel Buds failed to receive any attention. In contrast, Apple effectively uses its accessories to solidify its ecosystem, potentially setting the stage for future integrations with an AI-powered Siri. For Apple, these devices are not mere accessories but integral components of its strategy.

Awaiting Apple’s Move: Google I/O’s AI Strategy

Meanwhile, the tech world is poised for what might come next at Apple’s Worldwide Developers Conference (WWDC). This event is expected to reveal Apple’s approach to AI, possibly in partnership with companies like OpenAI or even Google. The question remains: can Apple truly compete, especially if its AI cannot integrate as deeply into its operating system as Gemini does with Android?

With a fall hardware event on the horizon, Google has the opportunity to observe Apple’s updates and possibly orchestrate its own defining AI moment, one that could rival the clarity and impact of Steve Jobs’ iconic iPhone introduction: “An iPod, a phone, and an Internet communicator… are you getting it?”

However, achieving that level of intuitive understanding and enthusiasm for Google’s AI initiatives proved elusive at this year’s I/O.
