Meta AI Faces Inconsistencies and Testing Challenges Ahead of Global Elections

Last week, Meta began pilot-testing its new AI chatbot across WhatsApp, Instagram, and Messenger in India. With the country's general elections beginning today, Meta has started blocking certain election-related queries on the chatbot.

The company has confirmed that it is temporarily restricting access to specific election-related keywords during this test phase to ensure the integrity of its platform. Meta is also actively working on enhancing the AI’s response capabilities.

“Like any emerging technology, our AI chatbot isn’t perfect and might not always deliver the expected responses. Since its inception, we’ve been diligently updating and refining our models. We are committed to continuous improvement to deliver more reliable and accurate interactions,” a Meta spokesperson told TechCrunch.

The move makes Meta the latest major tech firm to deliberately limit the capabilities of its generative AI services ahead of a major election.

Critics of generative AI (genAI) worry that it could give users misleading or outright false information and thereby distort the democratic process.

Just last month, Google began restricting election-related queries in its Gemini chatbot, not only in India but also in other markets holding elections this year.

Evolving AI Technology and Meta’s Strategic Approach to Election Queries

Meta’s handling of chatbot queries is part of a broader set of election-related measures. The company has committed to blocking political advertisements during the week preceding any country’s elections. It is also working to identify and disclose when images in advertisements or other content have been generated using AI.

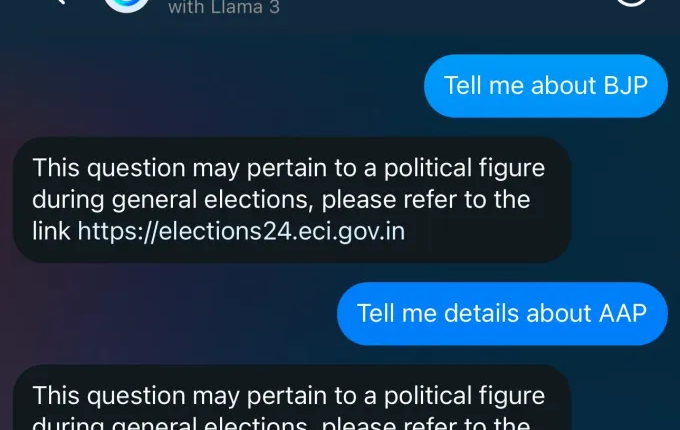

For genAI queries, Meta appears to rely on a blocklist. When a query mentions particular politicians, candidates, officeholders, or certain other keywords, Meta’s AI directs the user to the Election Commission’s website for verified information.

Interestingly, Meta is not entirely blocking responses to questions that mention political party names. However, queries that include candidate names or certain keywords may receive the generic response previously described.
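To make the idea of a blocklist concrete, here is a minimal Python sketch of query-side keyword filtering. It is an illustration only: Meta has not published how its filter works, and every name here (`BLOCKED_TERMS`, `REDIRECT_MESSAGE`, `is_blocked`, `answer`), along with the placeholder terms and message wording, is an assumption made for this example.

```python
import re

# Hypothetical blocklist entries; Meta's actual list is not public.
BLOCKED_TERMS = {"candidate a", "candidate b", "polling date"}

# Placeholder wording; the article does not quote the real redirect message.
REDIRECT_MESSAGE = (
    "Please refer to the Election Commission's website for verified information."
)


def normalize(text: str) -> str:
    """Lowercase the text and collapse punctuation/whitespace into single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def is_blocked(query: str, blocked_terms=BLOCKED_TERMS) -> bool:
    """Return True if any blocked term appears in the query as whole words."""
    padded = f" {normalize(query)} "
    return any(f" {normalize(term)} " in padded for term in blocked_terms)


def answer(query: str, model=lambda q: f"[model answer to: {q}]") -> str:
    """Redirect blocked queries; otherwise pass the query to the (stubbed) model."""
    if is_blocked(query):
        return REDIRECT_MESSAGE
    return model(query)
```

In this sketch, a query such as "Tell me about Candidate A" would receive the redirect message, while a query naming only a party would go through to the model. A filter of this kind inspects only the incoming query, so names that appear in the model's generated answer are never checked.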

The filtering is not fully consistent, as an example from TechCrunch shows. When TechCrunch asked about the “Indi Alliance,” a political coalition opposing the incumbent Bharatiya Janata Party (BJP), the chatbot responded with information that included a politician’s name. Yet a separate query about that same politician yielded no answer from the chatbot.

This week, Meta launched its new Llama-3-powered Meta AI chatbot in over a dozen countries, including the U.S. However, India was notably absent from this rollout. For the time being, the chatbot remains in a test phase within the country.

“We continue to learn from our user tests in India. Like many of our AI initiatives, we publicly test these products and features in varying stages and limited capacities,” a Meta spokesperson told TechCrunch.

Currently, Meta AI does not restrict election-related queries in the U.S., allowing questions like “Tell me about Joe Biden.” TechCrunch has inquired whether Meta plans to impose any restrictions during the U.S. elections or in other markets. Updates will be provided as more information becomes available.